The Robotics Locomotion and Control (RLC) Lab is led by an assistant professor and includes 5 PhD students, 6 Master's students, and 18 highly motivated undergraduate students from diverse academic backgrounds. What unites us is a shared passion for robotics and a strong commitment to hands-on, interdisciplinary research.

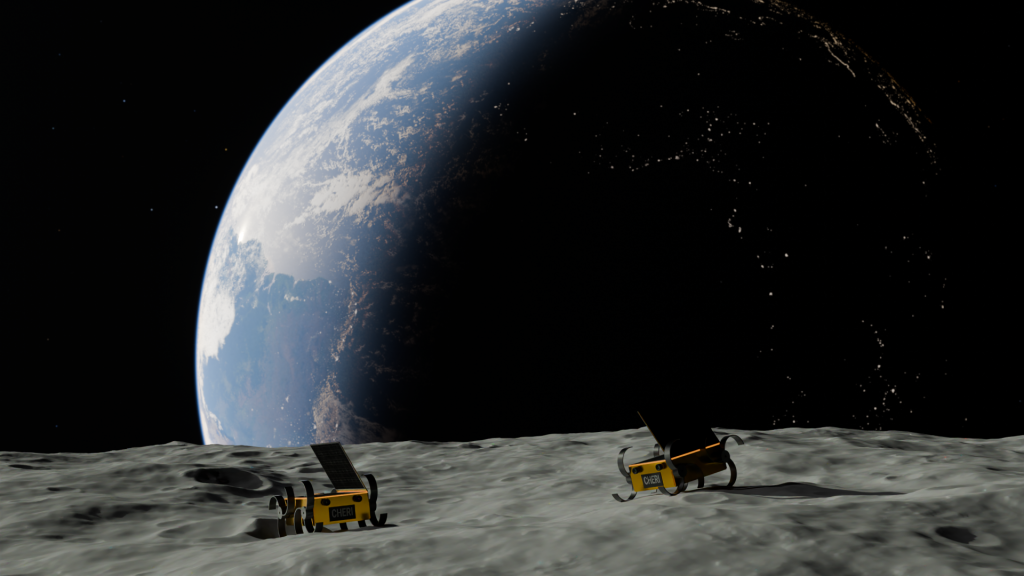

We specialize in a wide range of areas within robotics, including computer vision, perception, control, navigation, and sensor fusion. Our work covers a variety of autonomous platforms, from unmanned ground, surface, and underwater vehicles to planetary rovers capable of navigating challenging, unstructured environments, as in our ongoing CHERI Rover project.

Driven by collaboration, engineering creativity, and scientific curiosity, we aim to push the boundaries of intelligent systems and contribute to the advancement of robotics and autonomous technologies.

Our State-of-the-Art Facilities

The Robotics Locomotion and Control (RLC) Lab houses advanced facilities for developing and testing innovative robotics technologies. Our specialized spaces are designed to simulate real-world conditions, helping ensure that our results are accurate and carry over to a variety of challenging environments.

Motion Capture Laboratory

Our motion capture facility tracks and analyzes robot movements with high precision. It enables detailed studies of locomotion dynamics and provides the data we use to improve control systems and optimize robot performance in complex environments.

Robotic Mobility Arena

The Robotic Mobility Arena is a dedicated off-road testing ground with obstacles such as bridges, tunnels, and uneven terrain. It is well suited to testing autonomous ground vehicles and rovers, pushing the limits of their ability to navigate rough, unpredictable landscapes. The arena is paired with a custom indoor 3D localization system that tracks vehicles precisely, supporting the development and evaluation of their navigation capabilities.

Terrain Simulator

Our unique sand-landscape shaping mechanism can simulate a wide variety of terrains, from Earth's rugged deserts to the rocky, barren surfaces of the Moon and Mars. It lets us create controlled, dynamic test environments that approximate extraterrestrial conditions, giving us valuable insight into how autonomous systems perform on such terrain.