ONGOING PROJECTS:

Project 1: Adaptive Path Planning and Dynamic Obstacle Avoidance

This multidisciplinary project focuses on advancing autonomous navigation and perception for unmanned vehicles operating in complex environments on land, at sea, and in the air. Our work covers the design, development, and validation of motion planning algorithms, real-time perception, and efficient data handling for unmanned surface and ground vehicles, as well as for airborne systems equipped with LIDAR.

For autonomous unmanned surface vehicles (USVs), we are developing a dedicated test platform within our laboratory. This includes constructing a specialized test pool to simulate various wave conditions and using an OptiTrack motion tracking system to ensure accurate trajectory analysis. Our primary research objectives include designing and validating autonomous motion planning strategies for operations such as harbor docking and cargo transfer.

In the context of unmanned ground vehicles (UGVs), we focus on collision-free motion planning in 2D environments that contain both static and dynamic obstacles. Our approach has three phases. First, we use funnel-based path planning to ensure safe navigation among static obstacles. Next, we implement a probabilistic dynamic obstacle avoidance method that uses Kalman filters to predict obstacle trajectories and assess collision risk. Finally, a potential-field-based motion planner guides the vehicle to its target; when the vehicle is trapped in a temporary local minimum, it waits briefly until the path clears.
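The Kalman-prediction, collision-risk, and potential-field steps above can be sketched in Python with NumPy. This is a minimal illustration built on our own assumptions and is not the lab's implementation. Obstacles follow a constant-velocity model. Collision risk is a Monte Carlo estimate over the Kalman-predicted obstacle position. The repulsive term is the classic Khatib-style potential. The funnel-based static planner is not shown. All function names and parameter values (`kf_predict`, `plan_step`, `risk_max`, `d0`, and so on) are hypothetical.

```python
import numpy as np

# Hypothetical sketch: constant-velocity obstacle model with state [px, py, vx, vy].
def kf_predict(x, P, dt, q=0.1):
    """Kalman prediction; q is an assumed white-noise acceleration variance."""
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt
    G = np.array([[0.5 * dt**2, 0.0], [0.0, 0.5 * dt**2], [dt, 0.0], [0.0, dt]])
    P_new = F @ P @ F.T + q * G @ G.T
    return F @ x, 0.5 * (P_new + P_new.T)  # symmetrize against round-off

def kf_update(x, P, z, r=0.05):
    """Kalman correction from a noisy position measurement z = [px, py]."""
    H = np.hstack([np.eye(2), np.zeros((2, 2))])
    S = H @ P @ H.T + r * np.eye(2)
    K = P @ H.T @ np.linalg.inv(S)
    return x + K @ (z - H @ x), (np.eye(4) - K @ H) @ P

def collision_probability(p, x, P, radius, n=2000, seed=0):
    """Monte Carlo estimate of P(|obstacle - p| < radius) under the Gaussian prediction."""
    rng = np.random.default_rng(seed)
    samples = rng.multivariate_normal(x[:2], P[:2, :2], size=n)
    return float(np.mean(np.linalg.norm(samples - p, axis=1) < radius))

def potential_force(q, goal, obstacles, k_att=1.0, k_rep=0.5, d0=1.0):
    """Negative gradient of a quadratic attractive plus Khatib-style repulsive potential."""
    force = k_att * (goal - q)
    for o in obstacles:
        diff = q - o
        d = np.linalg.norm(diff)
        if 1e-9 < d < d0:  # repulsion only acts within the influence distance d0
            force = force + k_rep * (1.0 / d - 1.0 / d0) / d**2 * diff / d
    return force

def plan_step(q, goal, obs_x, obs_P, dt, radius=0.5, risk_max=0.05, step=0.1):
    """One control cycle: follow the potential field, or wait if stuck or the step is risky."""
    x_pred, P_pred = kf_predict(obs_x, obs_P, dt)
    f = potential_force(q, goal, [x_pred[:2]])
    norm = np.linalg.norm(f)
    if norm < 1e-3:  # at the goal or in a local minimum: hold position so the obstacle can move
        return q, "wait"
    q_next = q + step * f / norm
    if collision_probability(q_next, x_pred, P_pred, radius) > risk_max:
        return q, "wait"
    return q_next, "move"

q, status = plan_step(np.array([0.0, 0.0]), np.array([5.0, 0.0]),
                      np.array([0.6, 0.0, -1.0, 0.0]), 0.001 * np.eye(4), dt=0.5)
```

In the usage line, the obstacle is predicted to be 0.1 m ahead of the robot, so the vehicle waits instead of stepping. Waiting is a deliberately simple escape rule that matches the text. A real planner would also bound how long it waits.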

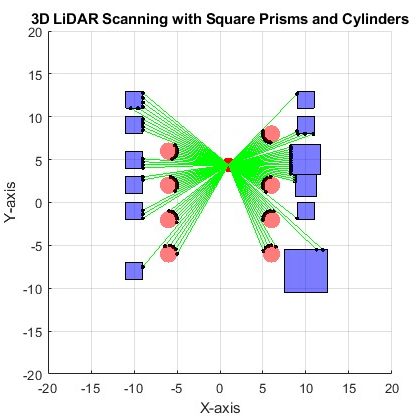

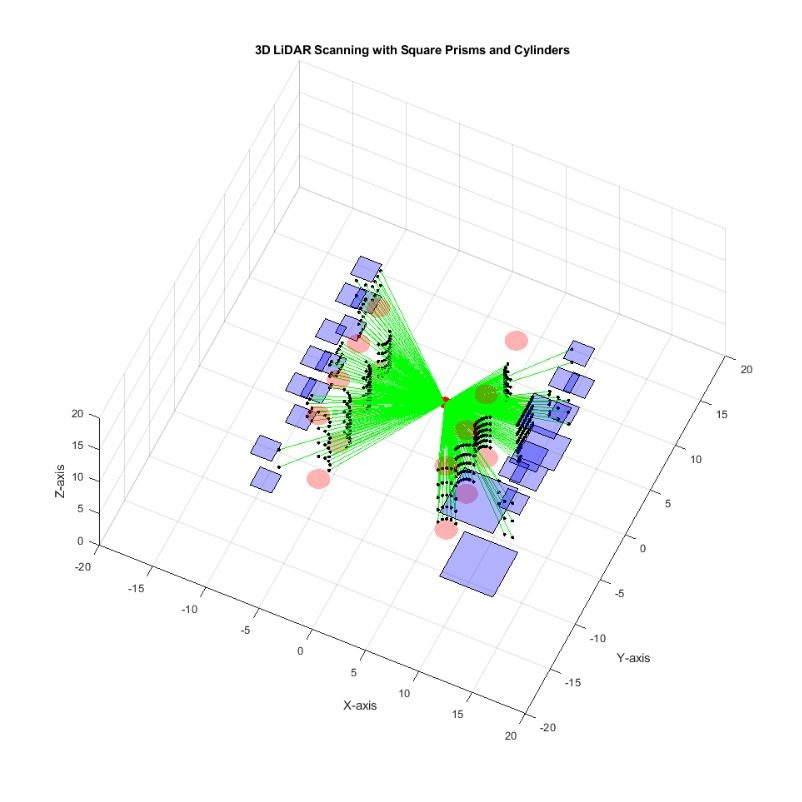

Complementing these efforts, our research also tackles the challenges posed by the large volumes of LIDAR data collected at high speed in unmapped environments, particularly by airborne systems. We segment and compress this data to enable efficient real-time processing and transmission over limited-bandwidth communication channels. By estimating object shapes and reconstructing the environment from LIDAR data, we aim to enable real-time Simultaneous Localization and Mapping (SLAM), extending the vehicle's autonomous capabilities while keeping processing and communication loads manageable.
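Voxel-grid downsampling is a common first compression step for LIDAR point clouds. Every point that falls inside the same cubic cell is replaced by the cell's centroid. We show it here only as an illustrative technique, because the project's actual compression scheme is not specified. The function name, the voxel size, and the synthetic cloud are hypothetical.

```python
import numpy as np

def voxel_downsample(points, voxel=0.5):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    keys = np.floor(points / voxel).astype(np.int64)        # integer voxel index per point
    uniq, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)                            # shape varies across NumPy versions
    sums = np.zeros((len(uniq), points.shape[1]))
    np.add.at(sums, inverse, points)                         # accumulate points per voxel
    counts = np.bincount(inverse, minlength=len(uniq))
    return sums / counts[:, None]

rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 10.0, size=(100_000, 3))            # synthetic scan, not real data
reduced = voxel_downsample(cloud, voxel=1.0)
print(cloud.nbytes, "->", reduced.nbytes, "bytes")
```

The voxel size controls the trade-off between bandwidth and geometric detail. Larger cells transmit fewer points but blur small objects.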

Through this integrated effort, we aim to contribute robust solutions for motion planning, environmental understanding, and data management in next-generation autonomous systems.

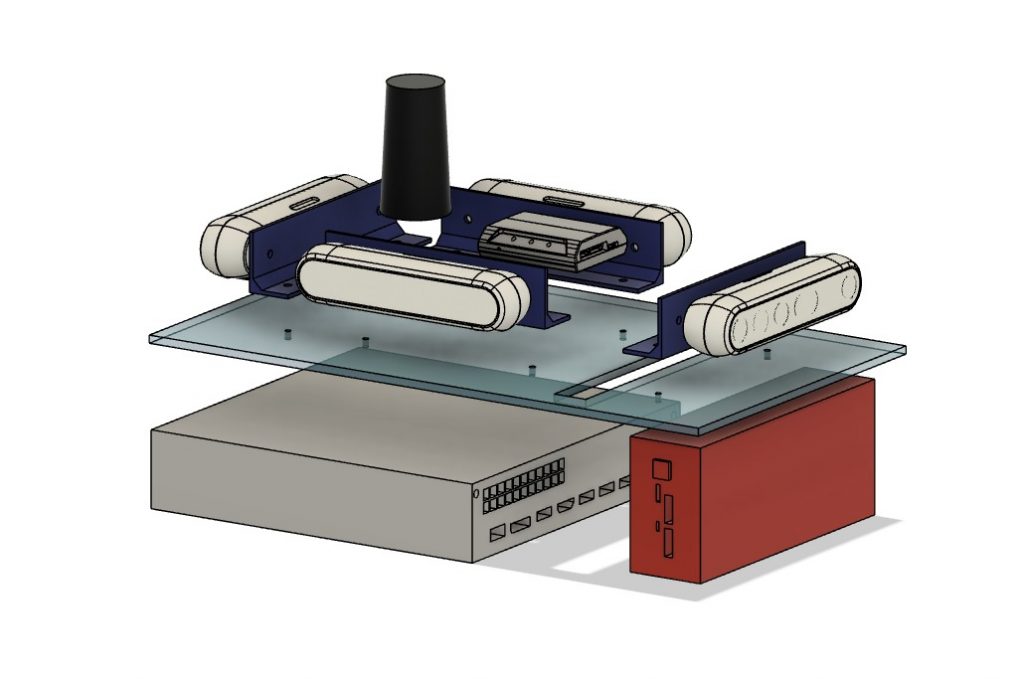

Project 2: Visual-Inertial Odometry Sensor Fusion Box

This project aims to build a comprehensive dataset for visual-inertial odometry (VIO) using the various robotic platforms in our lab, including wheeled, legged, and underwater vehicles. The objective is to develop and test VIO algorithms in diverse and challenging environments. The sensor fusion box will integrate multiple RGB-D cameras and a GPS sensor. This lets us collect data on platforms of widely varying scale, from miniature robots to cars, and also with the box carried by a person.

Additionally, we plan to run the MSC-KF (Multi-State Constraint Kalman Filter) algorithm in real time on our platform. This will let us both record data during each test and produce an estimate of the vehicle's trajectory. These real-time estimates can serve as references for future studies and as baselines for comparison with other algorithms.
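The MSC-KF avoids placing feature positions in the filter state. It stacks each feature's reprojection residuals over several cloned camera poses and projects them onto the left null space of the feature-position Jacobian. The sketch below shows only this projection and a generic EKF update, using synthetic matrices. IMU propagation, pose cloning, and Jacobian computation are omitted. The function names are ours and do not come from any particular MSC-KF library.

```python
import numpy as np

def msckf_project(r, H_x, H_f):
    """Eliminate a feature's 3-D position from its stacked measurement model.

    r   : (2M,)   reprojection residuals of one feature seen in M cloned camera poses
    H_x : (2M, N) Jacobian w.r.t. the filter state (IMU state plus pose clones)
    H_f : (2M, 3) Jacobian w.r.t. the feature position (assumed full column rank)
    """
    Q, _ = np.linalg.qr(H_f, mode="complete")
    A = Q[:, 3:]                 # orthonormal basis of the left null space of H_f
    return A.T @ r, A.T @ H_x    # 2M - 3 constraints that no longer involve the feature

def ekf_update(x, P, r_o, H_o, sigma=1.0):
    """Standard EKF update; A is orthonormal, so pixel noise stays sigma^2 * I."""
    S = H_o @ P @ H_o.T + sigma**2 * np.eye(len(r_o))
    K = np.linalg.solve(S, H_o @ P).T        # equals P H^T S^-1 because P and S are symmetric
    x_new = x + K @ r_o
    P_new = (np.eye(len(x)) - K @ H_o) @ P
    return x_new, 0.5 * (P_new + P_new.T)

rng = np.random.default_rng(1)
H_f = rng.normal(size=(6, 3))                # one feature seen in M = 3 clones (synthetic)
H_x = rng.normal(size=(6, 5))                # toy 5-dimensional error state
r = H_f @ np.array([1.0, 2.0, 3.0])          # residual caused purely by feature-position error
r_o, H_o = msckf_project(r, H_x, H_f)
x, P = ekf_update(np.zeros(5), np.eye(5), r_o, H_o)
```

Because the residual in the example comes only from feature-position error, the projection removes it entirely and the state is left unchanged. This is the property that lets the MSC-KF drop features from the state.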

–> Phase 1: LIDAR Data Segmentation and Compression for Real-Time Environmental Reconstruction

Recent advances in LIDAR technology have enabled the collection of rich, dense environmental data, especially from airborne systems. However, the large volume of data generated as a fast-moving vehicle covers unmapped regions makes real-time environmental reconstruction difficult. Left unprocessed, this data can overwhelm both the onboard processing system and the communication channels used to transmit it. To address this, we segment and compress LIDAR data so that it can be processed efficiently and transmitted over limited-bandwidth channels. By estimating the shapes of surrounding objects from the LIDAR data, we aim to reconstruct the environment and perform SLAM (Simultaneous Localization and Mapping) in real time. We also explore methods to keep the data light enough to transmit without overloading the system, which improves the efficiency of environmental reconstruction and autonomous navigation.
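One simple way to segment a scan and summarize object shapes is Euclidean clustering followed by an axis-aligned bounding box per segment. With this scheme, a segment of k points costs 6 numbers instead of 3k. We use it only as an illustrative stand-in, since the project's actual segmentation and shape-estimation methods may differ. The O(n²) neighbor search would normally be replaced by a k-d tree. The tolerance, minimum segment size, and function names are hypothetical.

```python
import numpy as np
from collections import deque

def euclidean_clusters(points, tol=0.5, min_size=3):
    """Region growing: points linked by gaps shorter than `tol` share a label; small groups become -1."""
    labels = np.full(len(points), -1)             # -1 = not yet visited
    next_id = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = next_id
        members, queue = [seed], deque([seed])
        while queue:
            i = queue.popleft()
            near = np.flatnonzero(np.linalg.norm(points - points[i], axis=1) < tol)
            for j in near:
                if labels[j] == -1:
                    labels[j] = next_id
                    members.append(j)
                    queue.append(j)
        if len(members) < min_size:
            labels[members] = -2                   # too small: mark as noise
        else:
            next_id += 1
    labels[labels == -2] = -1
    return labels

def box_summary(points, labels):
    """Compress each segment to an axis-aligned box [xmin, ymin, zmin, xmax, ymax, zmax]."""
    return np.array([np.concatenate([points[labels == c].min(axis=0),
                                     points[labels == c].max(axis=0)])
                     for c in range(labels.max() + 1)])

line_a = np.array([[0.1 * i, 0.0, 0.0] for i in range(10)])       # synthetic object 1
line_b = np.array([[5.0 + 0.1 * i, 5.0, 5.0] for i in range(10)]) # synthetic object 2
cloud = np.vstack([line_a, line_b, [[20.0, 20.0, 20.0]]])          # plus one isolated return
labels = euclidean_clusters(cloud)
boxes = box_summary(cloud, labels)
```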

–> Phase 2: Enhancing SLAM with Event Cameras for Active Sensing in Challenging Lighting

Event cameras are visual sensors that respond to changes in light intensity. Each pixel independently reports when its brightness changes, instead of the camera capturing conventional full frames. Because a stationary event camera viewing a static scene produces no events apart from noise, motion is essential to sensing. In this project, we explore the potential of event cameras by deliberately introducing errors in sensor position to actively sense the environment, similar to the active sensing mechanisms observed in species such as the glass knifefish. With this approach, we plan to enhance existing SLAM (Simultaneous Localization and Mapping) techniques so that they perform robustly in ultra-low or fluctuating light. The project aims to overcome the limitations of standard RGB-D cameras, which suffer from blur and focus problems in challenging lighting, and to improve sensing and environment reconstruction.
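The idealized event-generation model is a common simplification used in event-camera simulators. A pixel fires whenever its log intensity has changed by a contrast threshold C since that pixel's last event. The sketch below uses this model to show why self-generated motion matters: a 1-D row of pixels views a static edge, and the sensor is oscillated sinusoidally. The scene, threshold, and oscillation parameters are hypothetical choices, and real sensors add noise, refractory periods, and per-pixel threshold variation.

```python
import math

def scene(x):
    """Hypothetical static scene: a smooth dark-to-bright edge at x = 0 (intensity 1 to 10)."""
    return 1.0 + 9.0 / (1.0 + math.exp(-x / 0.2))

def simulate_events(amplitude, freq=5.0, C=0.2, pixels=21, T=1.0, steps=2000):
    """Idealized event pixels viewing `scene` while the sensor oscillates sinusoidally.

    Each pixel emits (t, pixel, polarity) whenever its log intensity has changed by
    at least the contrast threshold C since that pixel's last event.
    """
    xs = [-1.0 + 2.0 * i / (pixels - 1) for i in range(pixels)]
    ref = [math.log(scene(x)) for x in xs]        # per-pixel reference log intensity
    events = []
    for k in range(1, steps + 1):
        t = T * k / steps
        shift = amplitude * math.sin(2 * math.pi * freq * t)   # self-generated sensor motion
        for i, x in enumerate(xs):
            log_i = math.log(scene(x + shift))
            while abs(log_i - ref[i]) >= C:
                polarity = 1 if log_i > ref[i] else -1
                ref[i] += polarity * C
                events.append((t, i, polarity))
    return events

still = simulate_events(amplitude=0.0)    # static sensor, static scene
moving = simulate_events(amplitude=0.3)   # deliberate oscillation
print(len(still), len(moving))
```

With zero amplitude the static edge is invisible to the sensor. The oscillation makes pixels near the edge fire, which is the kind of information an active-sensing SLAM front end could exploit.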

Project 3: Actuation Mechanism of the Glass Knifefish

This project aims to understand the unique actuation mechanism of the glass knifefish, focusing on the dynamics of its elongated fin and the mutually opposing (counter-propagating) waves generated along it. The fish's undulatory fin motion is key to its swimming and maneuvering, and understanding this actuation mechanism can offer insights into bioinspired robotic locomotion. In the first phase of the project, we constructed and identified a single-wave structure generated by the fin, studying its characteristics and the underlying physical principles. Building on this foundation, phase 2 will examine the wave dynamics in more depth, exploring how the opposing waves interact and how they contribute to the fish's propulsion and control. The ultimate goal is a more comprehensive model of the actuation mechanism that can guide the design of bioinspired robotic systems able to mimic the fish's efficient and versatile movement in water.
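A simple kinematic picture of the opposing waves has one wave travelling from the head toward the tail and the other from the tail toward the head, meeting at a nodal point x_n along the fin. The sketch below encodes this picture with made-up amplitude, wavelength, and frequency. A phase offset of π - 2k·x_n keeps the fin continuous at the nodal point at all times. The thrust proxy is our own simplifying assumption, not a result from this project: it treats each wave's force as proportional to the fin length it occupies.

```python
import math

def fin_displacement(x, t, x_n, A=1.0, wavelength=0.5, freq=4.0):
    """Lateral fin displacement at position x (0 = head end) and time t.

    Head wave (x < x_n) travels toward the tail; tail wave (x >= x_n) travels toward the head.
    The offset pi - 2*k*x_n makes both expressions equal at x = x_n for every t.
    """
    k = 2 * math.pi / wavelength
    w = 2 * math.pi * freq
    if x < x_n:
        return A * math.sin(k * x - w * t)
    return A * math.sin(k * x + w * t + math.pi - 2 * k * x_n)

def net_thrust_proxy(x_n, fin_length=1.0):
    """Hypothetical linear model: forward thrust ~ head-wave length minus tail-wave length."""
    return x_n - (fin_length - x_n)

shape = [fin_displacement(i / 100, 0.0, x_n=0.6) for i in range(101)]  # fin profile at t = 0
```

Under this toy model, moving the nodal point toward the tail increases forward thrust, and a centered nodal point balances the two waves.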

–> Phase 1: Active Sensing and Environment Reconstruction in Glass Knifefish

This project investigates the active sensing behavior of the glass knifefish (Eigenmannia virescens), a weakly electric fish that uses its longitudinal fin and its electrosensory system to navigate and interact with its surroundings. The fish senses actively, continually adjusting its movements to gather sensory information, which is especially evident in how it handles tracking errors. This study focuses on the relationship between the fish's visual and electrosensory systems, with experiments in dark and light conditions to observe how the two interact. Using a test setup with a high-speed camera and a refuge that moves along a linear track, we aim to reproduce the fish's behavior by deliberately driving the tracking error away from zero, replicating its active sensing mechanism, and to reconstruct the environment model the fish perceives. The research also models the trade-off between exploration (increasing sensory input) and exploitation (tracking a target) in the fish's movement patterns.
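A toy tracking loop can illustrate the exploration-exploitation trade-off. In this hypothetical sketch, the fish position follows a sinusoidally moving refuge through a proportional controller and adds a self-generated oscillation of amplitude about `a`. Mean relative speed between fish and refuge stands in for "sensory input". The controller, gains, refuge trajectory, and that proxy are all our assumptions, not the lab's model.

```python
import math

def track_refuge(a, omega=10.0, K=2.0, T=20.0, dt=0.001):
    """Simulate y' = K (r - y) + a*omega*cos(omega t) with refuge r(t) = sin(0.5 t).

    Returns (RMS tracking error, mean |relative velocity|); the second value is a
    hypothetical proxy for how much sensory stimulation the motion produces.
    """
    y, err_sq, rel_speed = 0.0, 0.0, 0.0
    n = int(T / dt)
    for k in range(n):
        t = k * dt
        r, r_dot = math.sin(0.5 * t), 0.5 * math.cos(0.5 * t)
        y_dot = K * (r - y) + a * omega * math.cos(omega * t)  # tracking + active oscillation
        err_sq += (r - y) ** 2
        rel_speed += abs(r_dot - y_dot)
        y += y_dot * dt                                          # forward-Euler step
    return math.sqrt(err_sq / n), rel_speed / n

for a in (0.0, 0.1, 0.3):
    err, stim = track_refuge(a)
    print(f"a={a}: tracking error {err:.3f}, stimulation proxy {stim:.3f}")
```

Increasing `a` trades tracking accuracy (exploitation) for more relative motion (exploration). A weighted cost over the two outputs is one way such a model could select an operating point.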